This poster was created by Kyle Cranmer and me. It is about the tools we built, which were part of the discovery of the Higgs boson. The poster is from a while ago, but it deserves more exposure: the work behind it applies outside of physics, yet it remains largely unknown there.

PDF of the poster.

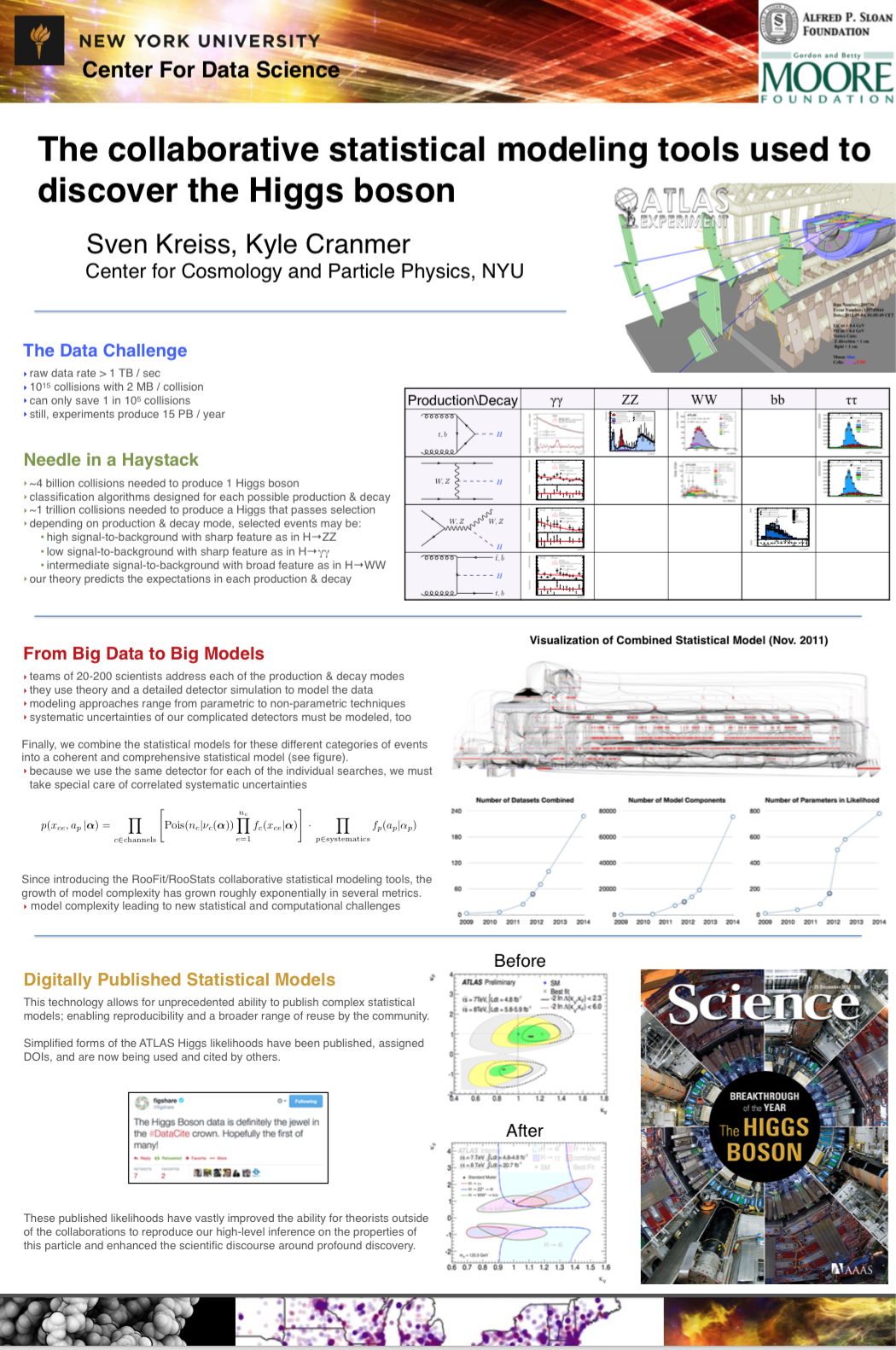

The Higgs group of the ATLAS experiment (one of the two general-purpose experiments at CERN's Large Hadron Collider) has a few hundred members working in seven subgroups. The final statistical test used to claim the discovery is performed on a combined statistical model. This model is built from input models contributed by all subgroups, together with models from detector performance groups and theoretical models from outside the Higgs group. It rests on statistical methods and technical innovations that deserve more attention. Outside particle physics, collaborative statistical modeling is gaining interest, but most people there are unaware of the experience and technology that particle physics has already built up.
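A minimal sketch of what combining input models means, in plain Python. It assumes that each subgroup contributes a single-bin Poisson counting channel and that a performance group contributes a Gaussian constraint on a shared luminosity scale. All class names, channel yields, and the parameter names `mu` (signal strength) and `lumi` are hypothetical illustrations, not the software described in the poster. The joint likelihood is the product of the parts, so the combined negative log-likelihood (NLL) is their sum. In this sketch, parameters with the same name are treated as one shared parameter.

```python
import math
from dataclasses import dataclass
from typing import Dict, Iterable

Params = Dict[str, float]


@dataclass
class CountingChannel:
    """Hypothetical one-bin Poisson channel contributed by one analysis subgroup.

    Expected events: lumi * (mu * signal + background). Parameters are looked up
    by name, so "mu" and "lumi" are shared by every part that uses them.
    """
    name: str
    observed: int
    signal: float      # expected signal yield at mu = 1 and nominal luminosity (made up)
    background: float  # expected background yield at nominal luminosity (made up)

    def nll(self, p: Params) -> float:
        lam = p["lumi"] * (p["mu"] * self.signal + self.background)
        return lam - self.observed * math.log(lam)  # constant log(n!) dropped


@dataclass
class GaussianConstraint:
    """Hypothetical auxiliary measurement, e.g. a luminosity calibration
    supplied by a detector performance group."""
    param: str
    nominal: float
    sigma: float

    def nll(self, p: Params) -> float:
        return 0.5 * ((p[self.param] - self.nominal) / self.sigma) ** 2


class CombinedModel:
    """Joint likelihood = product of all parts, so the joint NLL is their sum."""

    def __init__(self, parts: Iterable):
        self.parts = list(parts)

    def nll(self, p: Params) -> float:
        return sum(part.nll(p) for part in self.parts)


# Two illustrative channels (yields are invented) plus one shared constraint.
combined = CombinedModel([
    CountingChannel("H->gamma gamma", observed=30, signal=8.0, background=20.0),
    CountingChannel("H->ZZ->4l", observed=12, signal=5.0, background=6.0),
    GaussianConstraint("lumi", nominal=1.0, sigma=0.03),
])
print(combined.nll({"mu": 1.0, "lumi": 1.0}))
```

Matching parameters by name is just one simple design choice. It lets each group build its part independently, so the combination only needs agreed names for shared quantities such as the signal strength and common systematic uncertainties.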

The key idea is the separation of model and method. Collaborative statistical modeling at ATLAS is really just about how models are built, investigated, and debugged. The methods (inference, data generation, confidence intervals, credible intervals, posterior probabilities, hypothesis tests, ...) are implemented by tools that take a model as input. Any method, whether frequentist or Bayesian, can be applied to any model.
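The separation of model and method can be sketched as follows, assuming a model is anything that returns the negative log-likelihood for a dictionary of parameter values. The helper names (`counting_model`, `fit_mu`, `discovery_significance`, `posterior_prob_mu_above`) and the numbers are hypothetical. The frequentist method uses the likelihood-ratio test statistic q0 = 2[NLL(0) - NLL(mu_hat)] with the asymptotic approximation Z = sqrt(q0). The Bayesian method integrates a flat prior on a grid. Neither method knows anything about the model's internals.

```python
import math
from typing import Callable, Dict

Params = Dict[str, float]
NLL = Callable[[Params], float]  # a "model" here is anything returning -log L(params)


def counting_model(n_obs: int, s: float, b: float) -> NLL:
    """Hypothetical one-bin model: n_obs ~ Poisson(mu * s + b), with b > 0."""
    def nll(p: Params) -> float:
        lam = p["mu"] * s + b
        return lam - n_obs * math.log(lam)  # constant log(n_obs!) dropped
    return nll


# ---- Methods: each sees the model only through its nll callable ----

def fit_mu(nll: NLL, lo: float = 0.0, hi: float = 10.0, tol: float = 1e-9) -> float:
    """Maximum-likelihood estimate of mu via golden-section search on [lo, hi]."""
    g = (math.sqrt(5.0) - 1.0) / 2.0
    left, right = lo, hi
    while right - left > tol:
        c = right - g * (right - left)
        d = left + g * (right - left)
        if nll({"mu": c}) < nll({"mu": d}):
            right = d
        else:
            left = c
    return (left + right) / 2.0


def discovery_significance(nll: NLL) -> float:
    """Frequentist: q0 = 2 [NLL(mu=0) - NLL(mu_hat)], Z = sqrt(q0) (asymptotic)."""
    mu_hat = fit_mu(nll)
    q0 = 2.0 * (nll({"mu": 0.0}) - nll({"mu": mu_hat}))
    return math.sqrt(max(q0, 0.0))


def posterior_prob_mu_above(nll: NLL, threshold: float,
                            hi: float = 10.0, steps: int = 20000) -> float:
    """Bayesian: P(mu > threshold | data), flat prior on [0, hi], midpoint-grid integration."""
    h = hi / steps
    grid = [(i + 0.5) * h for i in range(steps)]
    vals = [nll({"mu": m}) for m in grid]
    ref = min(vals)  # subtract the minimum before exponentiating to avoid underflow
    weights = [math.exp(-(v - ref)) for v in vals]
    above = sum(w for m, w in zip(grid, weights) if m > threshold)
    return above / sum(weights)


# Usage: the same model object is handed to both kinds of method.
model = counting_model(n_obs=25, s=10.0, b=10.0)
print(fit_mu(model))                        # close to (25 - 10) / 10 = 1.5
print(discovery_significance(model))
print(posterior_prob_mu_above(model, 0.5))
```

Because every method only calls `nll`, a different model (for example, one combining many channels) could be passed to the same functions unchanged. A realistic tool would also profile or marginalize nuisance parameters, which this sketch omits.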

Links: